Upgrading H100 to H200

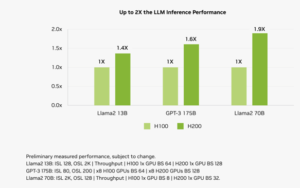

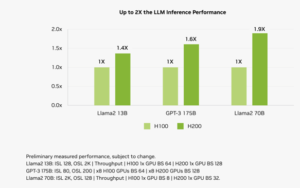

Semiconductor giant Nvidia has introduced its latest artificial intelligence chip, the H200, designed for both training and deploying a wide range of AI models. An upgraded version of the H100, the H200 carries 141GB of memory and is aimed in particular at “inference”, the stage where a trained model is used to answer queries. Nvidia reports that it delivers 1.4 to 1.9 times the performance of its predecessor on such workloads, particularly in tasks such as reasoning and generating answers to questions.

Enhanced Performance and Advanced Architecture

Built on Nvidia’s “Hopper” architecture, the H200 marks the company’s first use of HBM3e memory, which offers greater speed and capacity than the HBM3 used in the H100 and is therefore especially well suited to large language models. This may prompt buyers who have invested in the Hopper H100 accelerator to reevaluate their plans. Price will factor into that decision: Nvidia is likely to charge considerably more for the H200 with 141GB of HBM3e memory, potentially 1.5 to 2 times the price of its 80GB or 96GB HBM3 counterparts.
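To see why 141GB matters for large language models, a rough back-of-the-envelope check helps: a model’s weights alone occupy (number of parameters) × (bytes per parameter). The minimal sketch below assumes weights dominate memory use and ignores the KV cache, activations, and framework overhead, so real headroom is smaller. The helper names and the 70-billion-parameter example are illustrative choices, not Nvidia figures.

```python
# Hypothetical helpers: estimate whether a model's weights fit in GPU memory.
# Assumption: weights dominate memory use (KV cache, activations, and
# framework overhead are ignored), and GB means 10**9 bytes.

BYTES_PER_PARAM = {"fp16": 2, "bf16": 2, "fp8": 1, "int4": 0.5}

def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return weight storage in GB for the given numeric precision."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

def fits(num_params: float, dtype: str, gpu_memory_gb: float) -> bool:
    """True if the weights alone fit within the GPU's memory."""
    return weight_memory_gb(num_params, dtype) <= gpu_memory_gb

if __name__ == "__main__":
    params = 70e9  # illustrative 70-billion-parameter model
    for gpu, mem in [("80GB H100", 80.0), ("141GB H200", 141.0)]:
        for dtype in ("fp16", "fp8"):
            need = weight_memory_gb(params, dtype)
            print(f"{gpu}, {dtype}: needs {need:.0f} GB -> fits: {fits(params, dtype, mem)}")
```

Under these assumptions, a 70-billion-parameter model in 16-bit precision needs about 140GB for its weights: too much for an 80GB H100, but just within the H200’s 141GB, with almost nothing left over for the KV cache. At 8-bit precision the weights fit on either card.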

Performance Breakdown

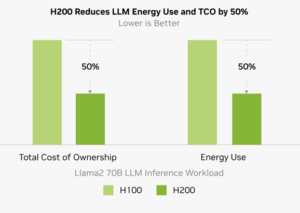

A comparison of the H100 and H200 on AI inference workloads shows significant gains, particularly in the inference speed of large models such as Llama 2. The H200 runs inference nearly twice as fast as the H100, and because it does so within the same power envelope, Nvidia says this translates into roughly a 50% reduction in energy consumption and total cost of ownership.
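The link between speed and energy in this claim is simple arithmetic: energy per token is power divided by throughput, so doubling throughput at the same power draw halves the energy spent on each token. The minimal sketch below illustrates this. The 700 W draw and the token rates are hypothetical values chosen for illustration, and total cost of ownership also depends on factors such as purchase price that the sketch ignores.

```python
def energy_per_token_joules(power_watts: float, tokens_per_second: float) -> float:
    """Energy (joules) spent per generated token at a steady power draw."""
    return power_watts / tokens_per_second

def energy_saving(speedup: float) -> float:
    """Fractional energy saving per token when throughput rises by `speedup`
    while the power draw stays the same: 1 - 1/speedup."""
    return 1.0 - 1.0 / speedup

if __name__ == "__main__":
    power = 700.0          # hypothetical power draw in watts, same for both GPUs
    baseline_tps = 1000.0  # hypothetical tokens per second on the older GPU
    for speedup in (1.9, 2.0):
        old = energy_per_token_joules(power, baseline_tps)
        new = energy_per_token_joules(power, baseline_tps * speedup)
        print(f"{speedup}x faster: {old:.3f} J -> {new:.3f} J per token "
              f"({energy_saving(speedup):.0%} less energy)")
```

At exactly 2x the saving is 50%; at 1.9x it is about 47%, which is why “nearly 2 times faster” corresponds to “roughly 50%” less energy per unit of work.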

Taking High-Performance Computing to the Next Level

Memory bandwidth plays a pivotal role in high-performance computing (HPC) applications: fast data transfer keeps processing units from stalling while they wait for data. In memory-intensive HPC tasks such as simulations, scientific research, and artificial intelligence, the H200’s higher memory bandwidth enables efficient data access and manipulation, and Nvidia cites up to 110 times faster time to results compared with conventional CPUs.
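One common way to reason about this is the roofline model: a workload’s attainable throughput is the lesser of the chip’s peak compute and its memory bandwidth multiplied by the workload’s arithmetic intensity (FLOPs performed per byte moved). The sketch below is an idealized illustration, not a benchmark. The 3.35 TB/s and 4.8 TB/s bandwidths are Nvidia’s published spec-sheet figures for the H100 SXM and H200 rather than numbers from this article, and the 1,000 TFLOP/s peak is a placeholder; because the two chips share the same compute, only bandwidth differs here.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic.
    Bandwidth in TB/s times FLOPs per byte gives TFLOP/s."""
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)

if __name__ == "__main__":
    PEAK = 1000.0                 # placeholder peak TFLOP/s, assumed equal for both
    H100_BW, H200_BW = 3.35, 4.8  # TB/s, spec-sheet figures (assumed)
    for intensity in (10, 100, 1000):  # FLOPs per byte moved
        h100 = attainable_tflops(PEAK, H100_BW, intensity)
        h200 = attainable_tflops(PEAK, H200_BW, intensity)
        print(f"intensity {intensity:>4}: H100 {h100:7.1f}, H200 {h200:7.1f} "
              f"TFLOP/s, speedup {h200 / h100:.2f}x")
```

In this model, memory-bound workloads (low intensity) speed up in proportion to bandwidth, about 1.43x with these figures, while compute-bound workloads see no gain. That is why bandwidth matters most for the memory-intensive HPC tasks described above.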

Future Outlook and Adaptation

Nvidia raised the H100’s inference performance through its Transformer Engine, lower-precision floating-point arithmetic, and fast HBM3 memory. With the H200’s larger and faster HBM3e memory, Nvidia reports that performance rises further, to as much as 18 times by its measure, without changes to the GPU’s compute hardware or to software. Because this improvement comes solely from greater memory capacity and bandwidth, it raises the question of how much future devices with even larger memory configurations could gain.
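Extra capacity can help even when compute is unchanged, because the memory left over after the model weights holds the key-value (KV) cache that each in-flight request needs during generation; more free memory allows more requests to be batched together. The sketch below estimates the largest batch that fits. The model shape (80 layers, 8 KV heads of dimension 128, 16-bit cache entries), the 70GB of 8-bit weights, and the 4,096-token context are hypothetical values chosen for illustration, and the estimate ignores activations and other runtime overhead.

```python
def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                             bytes_per_elem: int) -> int:
    """KV-cache size for one token: one key and one value vector per KV head per layer."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem

def max_batch(gpu_mem_gb: float, weights_gb: float, seq_len: int,
              per_token_bytes: int) -> int:
    """Largest number of full-length sequences whose KV cache fits in free memory."""
    free_bytes = (gpu_mem_gb - weights_gb) * 1e9
    if free_bytes <= 0:
        return 0  # the weights alone leave no room for any KV cache
    return int(free_bytes // (seq_len * per_token_bytes))

if __name__ == "__main__":
    # Hypothetical model shape, chosen for illustration only.
    per_token = kv_cache_bytes_per_token(layers=80, kv_heads=8, head_dim=128,
                                         bytes_per_elem=2)
    for gpu, mem in [("80GB H100", 80.0), ("141GB H200", 141.0)]:
        batch = max_batch(mem, weights_gb=70.0, seq_len=4096,
                          per_token_bytes=per_token)
        print(gpu, "max batch:", batch)
```

Under these assumptions, the 80GB card holds 7 concurrent 4,096-token sequences and the 141GB card holds 52. Larger batches let the same compute units serve more requests at once, which is one way added capacity alone can raise inference throughput.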

Embracing the Larger-Memory Trend

The trend toward larger memory in processors is a response to decades in which computing power grew faster than memory bandwidth, leaving many chips with more compute than their memory could keep fed. Although advanced HBM memory often costs more than the chip itself, Intel’s “Sapphire Rapids” Xeon SP variant equipped with 64GB of HBM2e memory has already demonstrated this shift. Nvidia is following the same trend, equipping its Hopper GPU with larger memory to raise performance without changing the compute hardware.

Anticipated Launch and Market Dynamics

Nvidia’s H200 GPU accelerator and the Grace Hopper Superchip are slated for official launch in the middle of next year. This aligns with the company’s technology roadmap and comes ahead of the “Blackwell” GB100 GPU and B100 GPU, expected in 2024. Nvidia’s move to boost GPU performance through larger and faster memory, rather than relying solely on compute upgrades, signals a broader industry shift.

Potential Implications for Buyers

Buyers considering Nvidia’s Hopper H200 ahead of its release next summer may face a difficult decision, given the anticipated advances in memory technology. The inference gains expected from the “Blackwell” B100 GPU accelerator point to a paradigm shift in which breakthroughs in memory, rather than compute upgrades, drive progress.

In conclusion, the H200 GPU accelerator, with its updated Hopper GPU and larger, faster memory, is poised for a mid-2024 release. This shift underscores the industry’s recognition of the critical role memory plays in overall performance and sets the stage for future innovations in GPU technology. Buyers are advised to plan their purchases accordingly to keep pace with these advances. To save money, buyers can also sell their used GPUs online.