Epoch AI’s “Top 10 Data Insights and Gradient Updates of 2025” (image credit: Epoch AI)

Epoch AI’s “Top 10 Data Insights and Gradient Updates of 2025” sheds light on several key trends that are shaping the broader AI hardware ecosystem — from cost dynamics and compute accessibility to infrastructure demand and energy considerations. These insights help frame where the AI compute market is headed and what that means for hardware supply, adoption, and lifecycle management.

1. AI Inference Costs Have Declined Substantially

One of the top insights from the report is that the cost of running large language model (LLM) inference has fallen by more than 10× from April 2023 to March 2025. Lower inference costs make AI capabilities more affordable for a wider range of users and use cases.

This trend reflects increasing efficiency in model deployment and competitive pressure among service providers. Because the same efficiency gains reduce the hardware needed to serve a given model, organizations may feel more comfortable investing in local compute infrastructure (including second‑hand servers and GPUs) to run models on‑premises or in hybrid environments, rather than relying entirely on cloud APIs.
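To put the 10× figure on a yearly footing, the sketch below converts a total price decline over a window into an equivalent constant annual rate. It assumes the decline compounded smoothly and treats April 2023 to March 2025 as a 23-month window. Because the report says "more than 10×", the result is a lower bound. The function name `annualized_factor` is illustrative, not something from the report.

```python
# Minimal sketch: convert a total price decline into an equivalent annual rate.
# Assumptions (not from the report): smooth compounding, 23-month window.

def annualized_factor(total_factor: float, months: float) -> float:
    """Return the per-year factor that compounds to `total_factor` over `months`."""
    if total_factor <= 0 or months <= 0:
        raise ValueError("total_factor and months must be positive")
    return total_factor ** (12.0 / months)


WINDOW_MONTHS = 23      # April 2023 -> March 2025, counted month to month
TOTAL_DECLINE = 10.0    # "more than 10x", so treat this as a lower bound

per_year = annualized_factor(TOTAL_DECLINE, WINDOW_MONTHS)
print(f"Equivalent decline: at least ~{per_year:.1f}x per year")

# Price path for a hypothetical $1.00 starting price under the same assumption.
for month in (0, 12, WINDOW_MONTHS):
    price = 1.0 / TOTAL_DECLINE ** (month / WINDOW_MONTHS)
    print(f"month {month:2d}: ${price:.3f}")
```

Under these assumptions the equivalent rate works out to roughly 3.3× per year; counting the window as 24 months instead would give about 3.2×.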

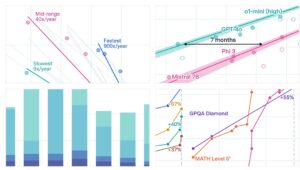

2. Frontier Model Performance Is Becoming Accessible on Consumer Hardware

Epoch AI highlights that the best open models that can run on a consumer GPU lag behind frontier AI models by only about a year or less. In practice, capabilities that today require frontier systems tend to reach consumer‑accessible models within roughly a year.

This trend has profound implications for AI hardware:

- Organizations and research teams can experiment with powerful models on more affordable hardware.
- Consumer and workstation GPUs, including used units, become relevant compute assets for smaller labs, startups, and edge applications.

As frontier capabilities become more accessible, secondary markets for capable GPUs and servers are likely to expand.

3. AI Organizations Are Using Compute for Experimentation

According to the report, much of OpenAI’s 2024 compute went to experiments rather than to final training runs or to inference.

This suggests that large AI labs and research organizations cycle hardware through different workloads and upgrade frequently to support experimentation. Such patterns can create steady supply opportunities for used compute hardware, especially as newer generations of GPUs and accelerators replace older fleets.

4. Installed NVIDIA Compute Is Growing Rapidly

Epoch AI reports that the amount of installed AI compute from NVIDIA chips has more than doubled annually since 2020, and new flagship chips account for most of this compute within approximately three years of release.
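The compounding behind "more than doubled annually" can be illustrated with a simple model. The sketch assumes installed compute grows by exactly 2× per year and that no hardware is retired. Under those simplifications, the fraction of today's stock added in the last k years is 1 − 2^(−k), which suggests why recent chip generations come to dominate within a few years. This is a toy model, not Epoch AI's methodology, and the function names are hypothetical.

```python
# Toy model (assumption): installed compute grows by a constant factor each year
# and nothing is retired. Not Epoch AI's methodology.

def growth_multiple(annual_factor: float, years: int) -> float:
    """Total growth after `years` of constant compounding."""
    return annual_factor ** years


def share_added_recently(annual_factor: float, k_years: int) -> float:
    """Fraction of current installed compute that was added in the last k years.

    If the stock is S(t) = S0 * g**t, the amount added in the last k years is
    S(t) - S(t - k), so the share is 1 - g**(-k), independent of t and S0.
    """
    return 1.0 - annual_factor ** (-k_years)


print(growth_multiple(2.0, 5))  # 2020 -> 2025 at exactly 2x/year: 32.0
for k in (1, 2, 3):
    print(f"added in last {k} year(s): {share_added_recently(2.0, k):.1%}")
```

At exactly 2× per year, this model puts 87.5% of the installed stock at less than three years old; faster growth, as the "more than doubled" wording implies, would push that share higher.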

This exponential growth in installed compute has several market effects:

- Larger fleets of GPUs in production environments eventually generate secondary supply as organizations refresh their infrastructure.
- The prominence of NVIDIA compute underlines its dominant role in AI workloads, shaping demand patterns for secondary hardware.

For the AI hardware market, rapid adoption of NVIDIA platforms often precedes a wave of used hardware availability, which can be acquired, refurbished, and resold.

5. Comparisons Between Model Generations Show Significant Leaps

The report also notes that GPT‑5 and GPT‑4 both represented major benchmark improvements compared with their predecessors.

Large performance leaps in flagship models drive demand for higher compute capacity to experiment with, benchmark, and deploy these models. That, in turn, influences how organizations plan hardware investments, including when and how they transition older units into secondary markets.

6. Energy Use Becomes More Visible

Among the Gradient Updates, one piece estimated that an average GPT‑4o query uses relatively little energy: less than a lightbulb consumes in several minutes.

While this suggests that per‑query energy costs are modest, overall energy consumption rises with scale. Organizations increasingly consider total energy usage and efficiency when evaluating hardware, particularly in data centers and enterprise deployments. Energy efficiency thus becomes a competitive factor in hardware valuation, especially for used equipment that can offer good performance per watt.
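The contrast between modest per-query energy and large aggregate consumption comes down to simple multiplication. The sketch below uses assumed inputs, not figures from this article: 0.3 Wh per query, a 10 W LED bulb, and a hypothetical volume of one billion queries per day. Measured values should be substituted before drawing conclusions.

```python
# Back-of-the-envelope energy arithmetic. All inputs are assumptions for illustration.
WH_PER_QUERY = 0.3               # assumed energy per query, watt-hours
BULB_WATTS = 10.0                # assumed LED bulb power draw, watts
QUERIES_PER_DAY = 1_000_000_000  # hypothetical daily query volume


def bulb_minutes(energy_wh: float, bulb_watts: float) -> float:
    """Minutes a bulb drawing `bulb_watts` could run on `energy_wh` watt-hours."""
    return energy_wh / bulb_watts * 60.0


def daily_energy_mwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return wh_per_query * queries_per_day / 1_000_000


print(f"One query ~ {bulb_minutes(WH_PER_QUERY, BULB_WATTS):.1f} bulb-minutes")
daily = daily_energy_mwh(WH_PER_QUERY, QUERIES_PER_DAY)
print(f"Fleet: ~{daily:,.0f} MWh/day, ~{daily * 365 / 1000:,.0f} GWh/year")
```

With these placeholder inputs, a single query corresponds to under two minutes of bulb time, while the fleet total reaches hundreds of megawatt-hours per day.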

7. Broader Automation Is Seen as the Primary Source of AI Value

One of the most discussed insights is the argument that most of AI’s economic value will come from broad automation across the economy, rather than gains in research and development alone.

This perspective reframes how organizations think about AI hardware:

- AI compute is increasingly a tool for operational scale, not solely for innovation research.
- Hardware investments, including those in used markets, are evaluated based on practical capability to support deployment, automation, and production workloads.

What These Trends Mean for the AI Hardware Market

Taken together, the insights from Epoch AI highlight several evolving dynamics in the AI hardware ecosystem:

1. Demand Is Expanding Beyond Labs

Falling inference costs and increasing accessibility of frontier models are pushing AI compute beyond elite research institutions. Startups, SMBs, and edge deployments now have access to high-performance compute, broadening the pool of potential hardware buyers. Organizations are seeking capable GPUs and servers that support both experimentation and production workloads. For those looking to sell GPUs or other compute hardware, this shift creates growing opportunities in secondary markets.

2. Secondary Markets Are Growing Through Infrastructure Turnover

Rapid compute growth and experimental workloads mean that hardware is upgraded more frequently. Organizations retiring older GPUs, servers, and memory provide a steady supply of high-quality used equipment. This turnover supports a healthy secondary market and allows smaller organizations to access affordable AI compute resources.

3. Performance and Energy Efficiency Are Increasingly Important

As deployment scale grows, energy consumption and efficiency become central considerations. Hardware that delivers strong performance per watt is particularly attractive for data centers, enterprise deployments, and organizations balancing cost and sustainability. Secondary markets that highlight energy-efficient GPUs and servers meet this demand.
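One way to compare candidates on performance per watt is to divide measured throughput by average power draw and to estimate yearly electricity cost. The card names, throughput numbers, power draws, electricity price, and utilization below are all hypothetical placeholders; throughput should come from the buyer's own benchmark on the target workload.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 365 * 24


@dataclass
class Accelerator:
    name: str
    throughput: float   # work units per second on your own benchmark (hypothetical)
    power_watts: float  # average power draw under that workload

    def perf_per_watt(self) -> float:
        return self.throughput / self.power_watts

    def annual_energy_cost(self, price_per_kwh: float, utilization: float = 1.0) -> float:
        """Electricity cost per year at the given utilization (0..1)."""
        kwh = self.power_watts / 1000.0 * HOURS_PER_YEAR * utilization
        return kwh * price_per_kwh


# Hypothetical candidates: a used card and a newer, more power-hungry one.
candidates = [
    Accelerator("used_card_a", throughput=100.0, power_watts=300.0),
    Accelerator("new_card_b", throughput=250.0, power_watts=700.0),
]

PRICE_PER_KWH = 0.15  # assumed electricity price
UTILIZATION = 0.6     # assumed average utilization

# Rank by efficiency rather than raw throughput.
for acc in sorted(candidates, key=Accelerator.perf_per_watt, reverse=True):
    cost = acc.annual_energy_cost(PRICE_PER_KWH, UTILIZATION)
    print(f"{acc.name}: {acc.perf_per_watt():.3f} units/s per W, "
          f"~${cost:,.0f}/year, ~${cost / acc.throughput:.2f}/year per unit/s")
```

Purchase price is deliberately left out; adding it would turn this into a total-cost-of-ownership comparison, which is where used hardware typically competes.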

4. Practical AI Use Cases Drive Hardware Value

The economic value of AI increasingly comes from practical, automated workflows rather than research compute alone. This trend emphasizes hardware that can support inference, fine-tuning, and hybrid AI operations, making secondary hardware relevant not just for labs, but for production environments across industries.

Conclusion

Epoch AI’s 2025 insights depict an AI compute landscape that is expanding in scale, accessibility, and practical application. Declining costs, democratized model performance, rapid infrastructure turnover, and automation-driven demand suggest a robust, continually evolving market for AI hardware.

For organizations managing AI infrastructure, understanding these trends helps guide strategic decisions in acquisition, resale, and deployment. Those looking to responsibly trade or repurpose hardware can leverage opportunities in the secondary market, such as selling used GPUs, to support sustainability while meeting evolving AI compute needs.